Another Freenet volunteer and I will be representing the Freenet Project at the Decentralized Web Summit next week. We will be giving at least one Freenet-related lightning talk, potentially more. I encourage everyone interested in Freenet, decentralization, and internet freedom to check out the Decentralized Web Summit, held next week on 2016-06-08 and 2016-06-09 in San Francisco at the Internet Archive offices. While the event on Wednesday costs money, it’s my understanding that the meetup on Thursday, when we’ll be giving our talk(s), will have free admission. For anyone who cannot physically be present, I’m told there will be a live stream. Check it all out here: decentralizedweb.net

OpenBazaar Needs Freenet

Congratulations to the OpenBazaar team for their hard work and on their recent release! I firmly believe that global, decentralized, anonymous marketplaces will liberate millions. Whether it loosens the grip of a despotic state, or breaks the monopoly of a private company, OpenBazaar will help many, many people. For this reason I consider OpenBazaar’s work incredibly important, and therefore important to implement correctly. Unfortunately, the first production OpenBazaar release is conspicuously missing anonymity. Lack of anonymity severely hampers OpenBazaar’s ability to do good. Due to lack of information, I was under the mistaken impression that OpenBazaar stopped prioritizing anonymity. Recently, however, I was happy to read their recent blog post. The post enumerates five key limitations of OpenBazaar’s initial release. They address my two key concerns and three other important concerns I had not considered. Most importantly for me, they acknowledge the importance of anonymity for OpenBazaar. While I was overall encouraged, I was a bit disappointed to see IPFS favored over a project I’ve recently become passionate about: Freenet. Freenet has been battle-tested for 15 years and directly addresses three of the five limitations of OpenBazaar, and indirectly supports a robust solution for a fourth. On the other hand, IPFS is young and only addresses one of the five issues. Though IPFS is experimenting with integrating third party anonymization solutions, that only addresses two of five. Even if/when these changes are completed, I believe Freenet is a superior solution even for the two. These two are anonymity and offline storage, something Freenet is designed to do from the ground up. Freenet allows users to anonymously publish and receive pieces of static data, which fits well with OpenBazaar’s model.

Offline Stores

The OpenBazaar post correctly recognizes that many users may not want to run OpenBazaar’s server at all times. Currently, if a user turns off their OpenBazaar server their store becomes inaccessible. This problem is doubly important because I want OpenBazaar to help people worldwide, which must include people without reliable internet access. Freenet directly solves this by not storing data on the computer which publishes it. Using Freenet’s basic data insertion, OpenBazaar users could anonymously publish contracts which could be accessed even after the user went offline. When data is inserted into Freenet, it is stored on other computers according to a derived routing key. When that data is accessed, it becomes replicated across additional computers in accordance with its popularity. For this reason, data on Freenet cannot be DDoSed by repeatedly querying it. Contrast this with OpenBazaar’s current model, where identifying the correct computer to DDoS is facilitated by the network, and the data is not replicated elsewhere. IPFS does satisfactorily solve this issue, but it is still a relatively young project.

On the other hand, Freenet simultaneously solves multiple problems today, with over a decade of testing.
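
To make the idea of storing data "according to a derived routing key" concrete, here is a toy sketch in Python. It is not Freenet's implementation: the use of SHA-256, the placement of keys and nodes on a circle of positions in [0, 1), and the names `key_location`, `circular_distance`, and `closest_nodes` are all assumptions made for illustration.

```python
import hashlib


def key_location(data: bytes) -> float:
    """Derive a position in [0, 1) from the content's SHA-256 hash (toy model)."""
    digest = hashlib.sha256(data).digest()
    # 48 bits fit exactly in a float, so the result is always below 1.0.
    return int.from_bytes(digest[:6], "big") / 2**48


def circular_distance(a: float, b: float) -> float:
    """Distance between two positions on a circle of circumference 1."""
    d = abs(a - b)
    return min(d, 1.0 - d)


def closest_nodes(location, node_locations, count=3):
    """Pick the nodes whose positions are nearest to the key's position."""
    ranked = sorted(node_locations, key=lambda n: circular_distance(n, location))
    return ranked[:count]


if __name__ == "__main__":
    nodes = [0.10, 0.50, 0.90, 0.02]
    where = key_location(b"an OpenBazaar contract")
    print(where, closest_nodes(where, nodes, count=2))
```

The point of the sketch is that where data lives depends only on the data's key, not on who published it, which is why the publisher can go offline without taking the data with them.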

Anonymity

As I alluded to in the previous section, on Freenet, computers and IP addresses have nothing to do with the content they store; anonymity is built in. Freenet further improves anonymity by dividing and encrypting all data, limiting the knowledge that other nodes may have about the data you are inserting or requesting. Though there are (statistical) caveats to this, Freenet’s default behavior obfuscates data insertion and access quite effectively. More importantly, Freenet provides a mechanism for gaining stronger anonymity by only connecting to trusted friend nodes. By doing this, you further increase the difficulty of analyzing your traffic. The larger your network of trusted friends is, the better you are protected. In this way, privacy can scale with one’s desire for it. Freenet is also exploring a tunneling concept to provide yet another layer of protection. All said, Freenet provides adequate anonymity by default, and provides the ability to improve anonymity as needed by adding trusted friends. IPFS, on the other hand, does not support anonymity natively, and any support will be experimental for the foreseeable future. Further, Freenet is designed to simultaneously support anonymity and offline caching, and the two properties complement each other. This is opposed to the way IPFS would interact with privacy layered on top. Consider that torrenting via Tor is discouraged due to its traffic requirements. On Freenet, by contrast, the popularity of a piece of data makes its retrieval more efficient, as it is replicated in more places.

Reputation

Freenet includes a third feature OpenBazaar requires in the form of an official plugin developed directly by the Freenet Project Inc. WebOfTrust is a plugin for Freenet that provides spam-resistant data publishing on the Freenet network. It accomplishes this by defining the concepts of identities, and trust between identities. An identity (optionally) lists other identities which it trusts or distrusts, and by how much. By considering all known identities, this produces a directed, weighted graph with identities as vertices, and trust relationships as edges. By traversing this graph from one identity to another, WebOfTrust calculates how much that identity trusts another. OpenBazaar can utilize this framework by setting trust based on their seller reviews. If Alice is scammed by Eve, and Alice writes a negative review, Alice’s WebOfTrust identity would indicate distrust to Eve’s identity. If Bob has positive or neutral trust to Alice, Bob would then transitively distrust Eve. Each trust relationship also may have arbitrary data attached to it, allowing a proof to be included. Furthermore, if Bob determines that Alice’s bad review of Eve is fraudulent (lacking a proof), Bob may choose to lower his trust of Alice in response. Those who trust Bob would then have lower trust of Alice, and potentially higher trust of Eve.
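
The Alice, Bob, and Eve scenario can be sketched with a small directed, weighted graph. This is a toy model and not WebOfTrust's actual scoring algorithm: the trust range of -1.0 to 1.0, the per-hop `decay` factor, and the function name `transitive_trust` are assumptions chosen only to illustrate how distrust can propagate through trusted identities.

```python
from collections import deque


def transitive_trust(graph, source, target, decay=0.5):
    """Toy transitive trust: follow non-negative trust edges breadth-first
    and return the first opinion of `target` found, weakened per hop.

    `graph` maps identity -> {other identity: trust in [-1.0, 1.0]}.
    Returns 0.0 when no opinion is reachable.
    """
    queue = deque([(source, 1.0)])
    visited = {source}
    while queue:
        identity, weight = queue.popleft()
        edges = graph.get(identity, {})
        if target in edges:
            return weight * edges[target]
        for other, trust in edges.items():
            # Only identities we do not distrust get to influence us.
            if trust >= 0 and other not in visited:
                visited.add(other)
                queue.append((other, weight * decay))
    return 0.0


if __name__ == "__main__":
    graph = {
        "Bob": {"Alice": 0.8},
        "Alice": {"Eve": -1.0},  # Alice's negative review of Eve
    }
    print(transitive_trust(graph, "Bob", "Eve"))
```

If Bob later decides Alice's review was fraudulent and sets his trust in her below zero, her opinion of Eve no longer reaches him in this model, which mirrors the correction mechanism described above.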

Search

Finally, Search can also be facilitated by the above Reputation algorithm. If a user is well trusted, and they claim a contract belongs to a particular keyword, that will be considered “correct”. If a user is not well trusted, the keyword may be ignored when searching. Conflicting keywords would be resolved by some weighted summing and a threshold value, and spamming keywords could be discouraged by automatically distrusting identities which do this (such spam would be identified manually by the user). In fact, prevention of spam like this is the core purpose of Freenet’s WebOfTrust!

Because Freenet adeptly addresses three of five significant concerns of OpenBazaar, I argue that the OpenBazaar team ought to carefully evaluate Freenet as a potential backend platform for OpenBazaar. I have a decent working knowledge of Freenet and am more than happy to lend my efforts to the OpenBazaar project (though there are far more knowledgeable individuals in the Freenet project). I did not seriously pursue this earlier because I was under the impression OpenBazaar was de-prioritizing anonymity, but I am happy to find that I was very mistaken. This document will undergo further revisions, but I am going to publish it as soon as possible.

I intend to follow this post with a more concrete proposal for a Freenet backend. I also welcome any questions, comments, or corrections for this article.

P.S. Thank you to the redditors that brought the OpenBazaar blog post to my attention, and encouraged me to publish my thoughts. (And thank you to the OpenBazaar team for making the world a better place)

The original OpenBazaar blog post: Current Limitations of the OpenBazaar Software

What Is Freenet?

Freenet is our answer to oppressive governments and corporate control. Though it’s actually 15 years old, Freenet’s time has arrived, in a big way. Freenet is a computer network designed from the ground up to protect your privacy and your freedom of expression. Many people early on viewed Freenet as a tool to liberate people in oppressive regimes like China. For these people, Freenet could re-open the free flow of information by avoiding China’s restriction. Recently however, Freenet shows additional utility in sidestepping corporate censorship even in relatively free countries. We also know that American intelligence agencies are actively involved in violating individuals’ right to privacy throughout the world. These two forces threaten your privacy and freedom of expression worldwide. Freenet is our tool to resist.

To protect your privacy and ensure your freedom of expression, Freenet’s network provides a distributed data store to computers on the network. Through Freenet, you can publish a website, videos, photos, songs, documents and statistics or any other data. There are also social media applications that exist only on Freenet, so you can say what you want, and truly be yourself. When you publish on Freenet, you can choose to keep your identity secret, and you can’t be censored. Freenet protects your privacy by anonymizing your requests for data on the Freenet network, and prevents censorship by storing data redundantly on different computers around the network.

Since Freenet is functionally a massive data storage system, Freenet supports two basic operations: “insert” and “fetch” data. When you insert a file into Freenet, Freenet encrypts it, chops it up into smaller pieces, and stores these pieces throughout the network. If you insert data into Freenet, and share a link with your friends, they are able to access that content even if your computer is off. In this way, publishing content is as simple as sharing a link! Since there are no servers to attack, and you are anonymous unless you choose not to be, you can express yourself freely! When you fetch a file from Freenet, your Freenet client software asks other computers on the network for the pieces it needs. The encrypted chunks of files cannot be decrypted by people who don’t have the link, so computers storing those chunks don’t really know what data they’re storing. This also means that when your computer asks another computer for a piece of data, the other computer doesn’t know exactly what data you’re retrieving unless they also have the link to it. The fact that your computer could end up temporarily storing an encrypted copy of data you find offensive may understandably make some people uncomfortable.

Though all of us wish to express ourselves freely, we may find other people’s expression to be unacceptable. The price of our own free expression is accepting others’ free expression. Freenet does not allow you to censor other people’s expression with the benefit that they cannot censor yours. From a technical standpoint, either it is possible to censor everything, or it is not possible to censor anything. We aren’t able to pick and choose, without also allowing others to censor us. While the rest of the world errs on the side of limiting free expression, Freenet makes the opposite decision, to err on the side of free expression. In future articles I will address these moral and practical concerns, and detail why I accept this, and why I think you should too.

Freenet unites anti-censorship properties, strong privacy, and decentralization, to foster human liberation. For 15 years the Freenet project has developed and deployed software to support these goals. Now more so than 15 years ago, the world desperately needs forces for anti-censorship, strong privacy, and decentralization. I encourage you to join Freenet today, participate in its communities, and say “No!” to censorship. If you’re able, I also ask you to contribute to the project. Freenet is driven by volunteer effort and community contributions. You may imagine that I mean you should write code or donate money. I do suggest you do these things if you are able. Freenet Project Inc. is a 501(c)(3) non-profit organization, so monetary donations are tax-deductible, but there exist many, many other opportunities to help. Simply by filing bug reports, writing documentation, helping others troubleshoot the software, inviting friends, or even just by participating in the network, you fight to liberate yourself and people around the world. If we wait to embrace Freenet until after we need it to escape an Orwellian dystopia, it will be far too late. It’s imperative that we make free communication ubiquitous long before we need it.

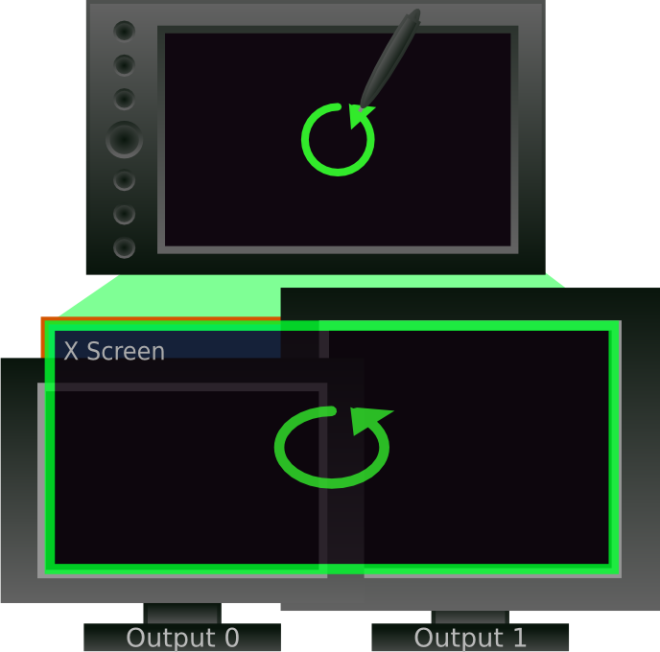

Inexpensive Monoprice Tablet

Back in April of 2014 I bought myself a birthday gift: a $50 digital drawing tablet from Monoprice. I read reviews stating that it worked well, and noted that Linux drivers existed thanks to the excellent DIGImend Project (old wiki here). The tablet, as I later learned, is a rebranded Huion H610, and seems relatively well regarded. Compared to similarly priced Wacom tablets it was a great value. When it arrived, I expected to do some tinkering to get it working, but six months later I realized the magnitude of this undertaking. Following months of hard work attempting to get my Monoprice graphics tablet working well on Ubuntu 12.04, I had mostly succeeded. (A dramatic recounting of this story will be told another time.) Unfortunately for me, a bug in the version of X.org included in 12.04 forced me to either upgrade to a newer release or slowly descend into the hell that is maintaining complex software and its dependencies outside the package manager. Because I’m lazy, I chose to upgrade to 14.04. The tablet is now reporting motion events in its full native resolution, pressure events are mostly correct, and even the buttons work. Unfortunately, though, the key IDs reported are mind-bogglingly stupid, such as Alt+F4, Ctrl+G, and Ctrl+C, with predictably annoying effects. I intend to address these issues in the coming months, but for the moment, I want to ensure the main feature, the pen and tablet, is functioning correctly. Before I dive into the problem and how I solved it, I want to explain the XRandR multi-monitor model. xrestrict itself directly mentions CRTCs and Screens, rather than intuitive end-user concepts like “Monitors”, so it is useful to understand them.

XRandR Model

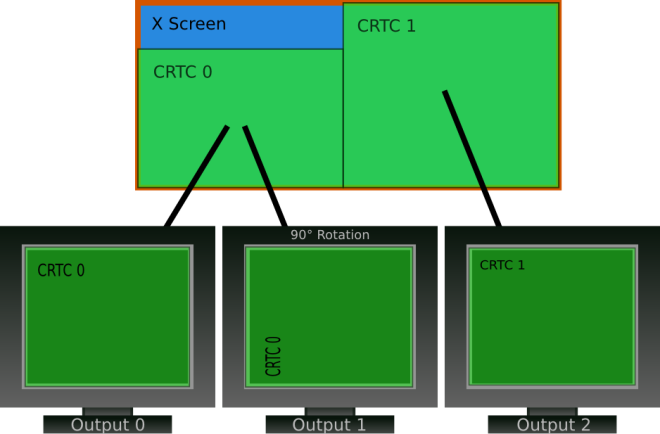

XRandR setups typically have a single object called a Screen, which is a virtual rectangular region in which windows exist. X11 may have multiple Screen objects, but long time X users will note that windows may not cross from one Screen to another, limiting the usefulness of having multiple X Screens. As a result, modern X11 configurations have this single virtual Screen. The Screen is then divided into one or more rectangular regions called CRTCs. These CRTCs are then displayed on zero or more outputs, which represent connections to monitors and may be rotated or scaled to display as the user desires.

XRandR’s model of the world.

Back to the Tablet

I was very nearly satisfied with my tablet’s functionality, with one significant caveat due to my multi-monitor setup. If I positioned my pen in the lower left of my tablet, the cursor appeared in the lower left corner of my left monitor. If I positioned my pen in the upper right of my tablet, the cursor appeared in the upper right of my right monitor. This is the obvious behavior for the tablet, and I initially didn’t think anything of it. There was one problem, however: if I drew a circle on the tablet, it appeared as a wide oval on screen. The issue was in how tablet coordinates were being translated to screen coordinates. In the X axis, tablet coordinates ranged from 0 to 40,000, and were mapped to the entire width of my X screen. As I have a dual monitor setup, the width of my X screen is actually the sum of the widths of my two monitors: 3280 pixels. In the Y axis, however, the tablet’s 25,000 units were mapped to the height of my screen: 1050 pixels. The aspect ratio of my X screen was approximately 3:1, while the aspect ratio of my tablet is 16:10. Directly mapping one to the other without regard for aspect ratio produced a distortion of nearly 2:1 from tablet coordinates to on-screen coordinates. This distortion is why a perfect circle drawn on the tablet, even with a guide, appeared as an oval on screen.
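
The "nearly 2:1" figure can be checked with a few lines of Python. The numbers are the ones quoted above; the helper name `stretch_ratio` is only for illustration.

```python
def stretch_ratio(tablet_w, tablet_h, target_w, target_h):
    """How much wider shapes appear on the target than on the tablet
    when the tablet is mapped edge to edge onto the target."""
    tablet_aspect = tablet_w / tablet_h
    target_aspect = target_w / target_h
    return target_aspect / tablet_aspect


if __name__ == "__main__":
    # Tablet is 40,000 x 25,000 units; the whole X Screen is 3280 x 1050 pixels.
    ratio = stretch_ratio(40000, 25000, 3280, 1050)
    print(f"horizontal stretch: {ratio:.2f}x")
```

A ratio of 1.0 would mean circles stay circles; anything above it means they are stretched horizontally by that factor.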

To remedy this I needed to provide a different mapping. Handily, XInput2 devices provide just the thing, the “Coordinate Transformation Matrix” which alters the pointer coordinates. If you alter this “Coordinate Transformation Matrix”, you can introduce scaling, skew, rotation and translation to the coordinates calculated by X.org. I initially calculated a “Coordinate Transformation Matrix” using a simple Python script, to restrict the pointer to my right screen. This worked, though I found I still had significant difficulty drawing. I attribute this to the “Coordinate Transformation Matrix” still being imperfect. It mapped tablet coordinates 40000×25000 to my right screen which is 1600×900. Though much closer to the correct aspect ratio, it still caused approximately an 11% distortion, which I believe to be significant.
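
For readers who want to see what such a matrix looks like, here is a minimal sketch (not the original script, which is not reproduced in this post). It assumes the matrix operates on coordinates normalized to the whole X Screen, and that the right monitor sits at x offset 1680, y offset 0; the offsets and the function name `restrict_matrix` are assumptions for illustration.

```python
def restrict_matrix(screen_w, screen_h, x, y, w, h):
    """Row-major 3x3 matrix mapping the full pointer range onto the
    rectangle (x, y, w, h) of a screen_w x screen_h X Screen."""
    return [
        [w / screen_w, 0.0, x / screen_w],
        [0.0, h / screen_h, y / screen_h],
        [0.0, 0.0, 1.0],
    ]


if __name__ == "__main__":
    # 3280x1050 X Screen; right monitor assumed at (1680, 0), size 1600x900.
    matrix = restrict_matrix(3280, 1050, 1680, 0, 1600, 900)
    values = " ".join(f"{v:.6f}" for row in matrix for v in row)
    # The nine values can be passed to:
    #   xinput set-prop <device> "Coordinate Transformation Matrix" <values>
    print(values)

    # Residual distortion: monitor aspect over tablet aspect, about 1.11.
    print((1600 / 900) / (40000 / 25000))
```

The last line reproduces the roughly 11% distortion: the tablet's full 16:10 surface is still being squeezed onto a 16:9 rectangle.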

Default Mapping of Pointer Coordinates

Because I have two monitors, stretching the tablet’s limited resolution over my entire screen seemed wasteful, as it would reduce effective resolution in the X axis by half. Furthermore, even though the tablet has 12.2x greater precision than the screen, I wasn’t certain of its accuracy, and the jagged lines I was drawing made me wonder if the tablet’s position readings might be noisy or jittery. If this was the case, then stretching the axes two times would double the size of these inaccuracies (not to mention any inaccuracies my unskilled hand might have). So I decided that I should instead restrict the tablet’s range to cover just one of my monitors. (The name xrestrict describes this behavior, though it may now be used to cover an area larger than the X Screen.) But this introduced a new problem: should I ever want to switch monitors, it would be quite a pain. I would have to recalculate this matrix, which I had by now realized was more complicated than simply chopping the screen up. I could rather trivially calculate these two matrices myself and store them somewhere, but it occurred to me that others may have similar issues, and I resolved to make a reusable utility. In fact, my hasty dump to GitHub and this blog post are a direct result of discovering others with similar problems to mine, and a hope that xrestrict could help them.

Enter xrestrict

xrestrict’s core functionality is to calculate the correct “Coordinate Transformation Matrix” to map a tablet device’s effective area to a portion of your screen without distortion. Originally, xrestrict needed to be told the XID of the pointer device. From there it discovers the X and Y axis bounds of that pointer device. It also discovers the size of the X Screen, which may be larger than any single monitor on multi-monitor systems. In fact, typically it is large enough to contain all monitors side by side, except when monitors are mirrored. I want to ensure it’s useful for as many people as possible, regardless of Linux experience.
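
As a rough illustration of the calculation described above, here is one way a distortion-free matrix could be derived. This is a sketch under stated assumptions, not xrestrict's actual code: it scales the tablet uniformly until it covers the target region, anchors it at the region's top-left corner, and lets the excess spill past the region. The function name and parameters are hypothetical.

```python
def aspect_preserving_matrix(tablet_w, tablet_h, screen_w, screen_h,
                             region_x, region_y, region_w, region_h):
    """Row-major 3x3 matrix mapping the tablet onto a region of the
    X Screen with the same scale (pixels per tablet unit) in both axes."""
    # Use the larger per-axis scale so the whole region stays reachable.
    scale = max(region_w / tablet_w, region_h / tablet_h)
    mapped_w = tablet_w * scale
    mapped_h = tablet_h * scale
    return [
        [mapped_w / screen_w, 0.0, region_x / screen_w],
        [0.0, mapped_h / screen_h, region_y / screen_h],
        [0.0, 0.0, 1.0],
    ]


if __name__ == "__main__":
    # 40000x25000 tablet, 3280x1050 X Screen, 1600x900 monitor assumed at (1680, 0).
    m = aspect_preserving_matrix(40000, 25000, 3280, 1050, 1680, 0, 1600, 900)
    print(m[0][0] * 3280, m[1][1] * 1050)  # mapped size in pixels
```

With these numbers the tablet maps to an area of about 1600 by 1000 pixels, so its 16:10 shape is preserved, at the cost of a strip of tablet surface that lands below the 900-pixel-tall monitor.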

Basic Usage

The current recommended usage is to invoke

xrestrict -I

This will prompt you to use your tablet device to select the CRTC you wish to restrict your tablet to. Use your tablet cursor to click somewhere on the screen on the monitor you want to use.

Alternatively, if you want your tablet to be able to access all monitors, but you want to correct the aspect ratio, you can invoke

xrestrict -I --full

This will “restrict” your tablet to the entire virtual X Screen. The only downside to this is that, unless your tablet’s aspect ratio matches your Screen’s aspect ratio (in which case you never needed xrestrict to begin with!), some portion of your tablet’s surface will be mapped to portions of the Screen which are not displayed on any monitor. This means that portions of your tablet will be effectively useless. If you want to avoid this, there are alternative options to control how your tablet’s surface is mapped. View xrestrict’s usage for more information.

Pending Problems

Due to feature “creep”, xrestrict is no longer a descriptive name, as it does more than restrict a tablet device to a particular monitor. I’d hate to keep a non-descriptive name like that for historical reasons when that “history” is only a few weeks old. The hid-huion drivers also report having an X rotational axis and a Z axis, which causes a small amount of confusion for X clients. Gimp, for instance, seems blissfully unaware that X tells it specifically what axis is the pressure axis, and instead uses a numbering scheme. This scheme causes one of the mis-reported axes (Z if I remember correctly) to be mapped to pressure for Gimp’s paint tool by default. I suppose this is a Gimp bug as the information describing the type of each axis is available, but my tablet also shouldn’t be reporting it has axes it actually doesn’t. For the time being I’ve worked around this by configuring the tablet in Gimp, which allowed me to easily remap the correct axis to pressure. At some point I may explore correcting this in the kernel driver, but I’m not particularly comfortable making modifications which “work for me” on my hardware, on a driver which is intended to be generic for Huion tablets. I don’t have the hardware to test it on all possible combinations, though perhaps through the DIGImend project I can get some regression testing.

The hid-huion drivers I modified still report painfully stupid buttons. I can either remap them in X.org, or attempt to remap them using udev’s hwdb. For now I’m planning to use hwdb so it can potentially be compatible with Wayland in the future. My tablet appears as three identically named devices in X.org, which makes it difficult for the user to identify the correct device id at a glance. For this reason, requiring the user to find the XID of their tablet is a poor user interface (even for a command line application!). Between my initial draft of this article and its publishing, I’ve added the “interactive” mode to xrestrict, which eliminates the need to discover the XID manually. Another user friendly feature I want is to provide a persistent identifier to the user’s tablet device. By providing the identifier, the user could for instance use interactive discovery once to determine their tablet, and from then on have a small button which invokes xrestrict --device-id-file=~/.config/xrestrict/mytablet which automagically restricts their tablet to CRTC 0.

Intent To Update

Hi Everyone,

It’s been an absurdly long time since the last time I updated this blog. For the moment life has allowed me some free time, some of which I want to devote to blogging. Since my last blog post almost three years ago I fell down the rabbit hole of 3D Printing, Electronic Engineering, and Robotics, all of which I want to share. With the remainder of my free time I’ll be working on software, hopefully some of which I can contribute to the world. Whenever I do that I intend to provide writeups here for documentation and entertainment purposes. It’s good to be back.

Thanks,

Dan

Expect this Inquisition!

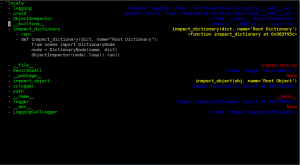

One of the things I’ve been working on lately, aside from my resumé and everything else, is a little object inspector for Python objects. I plan on using it to debug PyPy translation test failures, as the object trees are often complex, and I think this will help me.

I’ve put the code up on GitHub at http://github.com/Ademan/Inquisition. The basic usage is to call inspect_object(my_object) or inspect_dictionary(my_dict) to inspect either an object or a dictionary. This specifies a root node to start browsing at. You’ll need a bleeding edge version of urwid that has the treetools module.

Pythonic Pumpkin Carvings

PyPy and the JVM

I admit I’ve been lazy lately, but I have been working on a few things, the most interesting of which is translating PyPy to the JVM. Antonio Cuni did a lot of work getting PyPy to translate to the .NET platform, and to allow JIT generation for that platform. As a mixed blessing, his code has remained in a branch that is massively out of sync with trunk. The good news is that this allowed me to very quickly revive JVM support in his branch. The patch is somewhere on paste.pocoo.org; I’ll track it down later. Antonio expressed to me that he would like to port his changes by hand to trunk, rather than attempting any sort of merge. Because of that, I’ve focused my attention on trunk. The PyPy translator is extremely powerful, and consequently, extremely complex. I feel I’ve wrapped my head around a significant portion of it, and I was able to address one major issue preventing translating trunk to .NET and the JVM. There’s plenty more to go, but I’m still working.

Micronumpy

I’ve slowed down a lot, but I recently added the support code for array broadcasting, which is essential for proper handling of arrays. After that, there should be a solid enough foundation to implement most of NumPy in pure Python on top of micronumpy arrays and, guided by profiling, to implement some of it in RPython. I may try my hand at improving performance before I finish broadcasting support; I’m not sure.
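
For readers unfamiliar with broadcasting, the shape rule NumPy uses can be sketched in a few lines of pure Python. This illustrates the rule only, not micronumpy's implementation, and the function name `broadcast_shape` is made up for this example.

```python
from itertools import zip_longest


def broadcast_shape(a, b):
    """Return the shape two arrays of shapes `a` and `b` broadcast to,
    following NumPy's rule: align trailing dimensions; each pair must be
    equal or contain a 1; missing leading dimensions count as 1."""
    result = []
    for x, y in zip_longest(reversed(a), reversed(b), fillvalue=1):
        if x == y or y == 1:
            result.append(x)
        elif x == 1:
            result.append(y)
        else:
            raise ValueError(f"shapes {a} and {b} cannot be broadcast together")
    return tuple(reversed(result))


if __name__ == "__main__":
    print(broadcast_shape((3, 1), (4,)))
    print(broadcast_shape((5, 1, 4), (3, 1)))
```

Once the result shape is known, an element-wise operation can walk both operands as if each had that shape, repeating values along any dimension of size 1.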

All Hallows Eve

Not that this excuses my sloth, but here’s what I carved today :-). I’d never tried to carve a pumpkin to be semi-transparent before, but it turned out quite well actually, better than my pumpkins usually do…

Performance Update

As promised, I haven’t just dropped micronumpy; I’m continuing to work on it. As of September 10th, 2010, micronumpy takes 1.28736s per iteration over the convolve benchmark, and NumPy on CPython takes 1.87520s per iteration. That is about 31.3% less time per iteration than NumPy. I didn’t record the exact numbers near the end of the SoC, but I believe I’ve made things slower still… On the bright side, I’m passing more tests than ever, and I support slicing correctly. On the downside, I have no idea why it’s slower. I eliminated a whole loop from the calculation, so I expected at least a moderate gain in performance… I’m investigating now, so I’m keeping this short.
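
Since percentage comparisons of timings are easy to misread, here is the arithmetic behind the figure above, using only the two timings quoted. The variable names are for illustration.

```python
micronumpy_s = 1.28736  # seconds per iteration, micronumpy on PyPy
numpy_s = 1.87520       # seconds per iteration, NumPy on CPython

# Fraction of NumPy's time that micronumpy saves (about 0.313).
time_saved = (numpy_s - micronumpy_s) / numpy_s

# How many times faster micronumpy is (about 1.46x).
speed_ratio = numpy_s / micronumpy_s

if __name__ == "__main__":
    print(f"time saved: {time_saved:.1%}, speed ratio: {speed_ratio:.2f}x")
```

In other words, a 31.3% reduction in time corresponds to running roughly 1.46 times as fast, which is short of the "twice as fast" result seen earlier in the summer.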

Dan

When All is Said and Done

In the Beginning

Back when I was young and naïve at the beginning of the summer, I proposed to continue the work that a few PyPy developers and I had worked on, a reimplementation of NumPy in RPython. The project holds a lot of promise, as PyPy can generate a JIT compiler for itself and its components written in RPython. With a NumPy array written in RPython, the PyPy JIT can see inside of it and from that can make far more optimizations than it could otherwise. Since the PyPy JIT is especially good at optimizing CPU/computationally expensive code, bringing the two together could go a long way to bridge the gap between Python performance, and statically compiled languages.

As luck would have it, my project was categorized by the Python Software Foundation as a NumPy project, rather than a PyPy project, whose developers I’d been bugging and asking questions for some time. I soon came into contact with Stéfan van der Walt, a member of the NumPy Steering Committee. After consulting with him and the NumPy mailing list, it was decided that most people would not find a super fast NumPy array very useful by itself. For it to matter to most people, it would need to be able to do everything that the existing NumPy array does, and someone brought up the point that there is a great deal of C and Cython code already written which interacts with NumPy arrays, and it’s important that my project would allow these things.

So my project ballooned to a huge size, and I thought I could handle it all. The new burden of full compatibility was to be attacked by porting NumPy to PyPy and providing an easy interface for switching to and from NumPy and micronumpy arrays. Unfortunately, this pursuit wasn’t very fruitful, as PyPy’s CPyExt isn’t yet equipped to handle the demands of a module as all encompassing as NumPy. I spent a fair amount of time simply implementing symbols to satisfy the dependencies of NumPy. I made some significant changes to NumPy which are currently sitting in my git repository on github. I don’t know what the future holds for them unfortunately (If the NumPy refactor is completed soon enough, I may be able to sidestep CPyExt which will be faster anyways).

Midterms

Around midterms I had micronumpy arrays working well enough that they could run the convolve benchmark and handily beat NumPy arrays (twice as fast is fairly impressive). However, the point is to demonstrate that the JIT can speed up code to near compiled-code speeds, theoretically removing the need to rewrite large portions of Python code in C or Cython. By this time, it was becoming clear that getting NumPy to work with PyPy was not going to happen over the summer. I’ve adjusted my expectations: NumPy working on PyPy is still on my TODO list, but won’t be completed this summer. This might be for the better anyway, as NumPy is being refactored to be less Python (and therefore CPython) centric. As a result, in the near future I may be able to completely avoid CPyExt and use RPython’s foreign function interface to call NumPy code directly.

The Final Stretch

One of the beautiful things about PyPy’s JIT is that it’s generated, not hard coded, so I didn’t have to do anything in order to have micronumpy be JITed. Unfortunately, in the past three days or so, I’ve discovered that my code no longer works with the JIT. I’ve done all I can to figure out what’s wrong, and I can’t fix it on my own. Diving into the JIT in the last 24 hours of the Summer of Code surely won’t bear any fruit. I’ve put up my distress signal on the mailing list, and hopefully this issue can be resolved in time to provide some awesome benchmark results. If not, at least I can get this resolved in the next couple of weeks and then move on to the other things I want to fix.

EDIT: Thanks to the help of the core PyPy developers, we determined that the problem was that arrays allocated with the flavor “raw” were being accepted. Apparently these arrays still have length fields; by using rffi.CArray I was able to instruct PyPy to construct an array without a stored length field.

I’d also like to add that in the final hours, we added support for the NumPy __array_interface__ so that as soon as NumPy is working on PyPy, NumPy can take micronumpy arrays and do all sorts of useful things with them, and then when you need speed for simpler operations, you can pass your NumPy arrays to micronumpy (this side of the transaction hasn’t been implemented yet).

The End

So here we are at the end of the Summer of Code, and my project isn’t where I wanted it to be. Specifically, given my addition of slice support, performance has dropped to around 50% faster than NumPy, even farther from my goal, so that’s my top priority to address in the coming weeks. In my previous blog post I outlined what my plans are for the future (as I don’t like leaving things undone). Basically it comes down to:

- Optimizing!

- Minor compatibility fixes

- Bridging NumPy and PyPy completely

Thanks

I’d just like to thank Google very quickly, and specifically Carol Smith, who has done a great job of managing the Google Summer of Code this year. I thoroughly enjoyed the program, and would love to do it again given the chance. I’ve learned a lot about writing software, dealing with deadlines, and time management (which is a skill I’ve let atrophy…) this summer. And thanks to you who’ve taken interest in my project. If you want to check back occasionally, the summer may be over, but my project isn’t, and I’ll be sure to brag about benchmark results as soon as they’re more favorable :-).

I’d also like to thank my mentor, Stéfan van der Walt, for his help throughout my project, and for being supportive and understanding when unexpected problems occurred and set us back. And I’d like to thank Maciej Fijałkowski for his support from the PyPy side. The rest of the PyPy developers have all been helpful at some point or another, so thanks to them too.

The End is Nigh!

Repent!

We’re already past the suggested pencils down date for the Google Summer of Code, and I’m certainly paying my penance for my previous sloth. Just last night I got the test suite passing again, after several hours of hacking. Advanced indexing is nearly done, which is wonderful. I currently have one slice-related issue, which I’ll hopefully be resolving in the next couple of hours.

The Next Week

As soon as I have this indexing done, it’ll be time to optimize. Maciej was kind enough to show me how to get tracing information, so that I can produce the most JIT friendly code I can. I probably will spend the next five days working on tuning that. The original goal, of course, was to be near Cython speeds using the JIT, and we were nowhere near that on the first pass (though twice as fast as CPython and normal NumPy). Unfortunately, with the addition of the advanced slicing, I may have made Cython speeds harder to achieve. Hopefully the JIT will be ok with my first pass with slicing, however I’m prepared to revert to “dumb slicing” for the end of the GSoC and resume advanced indexing support after it is over. I’d feel bad about that though, as that’s been my major stumbling block these past weeks. In the next few hours I need to make sure everything translates so that I have something to show to Stéfan tonight.

Beyond

My biggest regret from the summer of code, is that I haven’t succeeded in porting NumPy to PyPy. This is something I hope to address in my free time this coming semester. This will require extensive work on CPyExt which is a complicated beast.

Additionally, I want to make sure that micronumpy is as useful as it can be, and that’s something that should be pretty easily accomplished in my free time. This will include implementing basic math operations, and some ufuncs. I may make a first pass at everything with naïve implementations written in applevel Python, then progressively optimize things. Depending on the progress for the refactoring of NumPy, I might be able to plug in some NumPy code for fast implementations of some things which would be great.

Dan